shuhongchen

shuhong (shu) chen

陈舒鸿

info

email: shu[a]dondontech.com

web: shuhongchen.github.io

github: ShuhongChen

myanimelist: shuchen

linkedin: link

cv: latest

academic

google scholar: TcGJKGwAAAAJ

orcid: 0000-0001-7317-0068

erdős number: ≤4

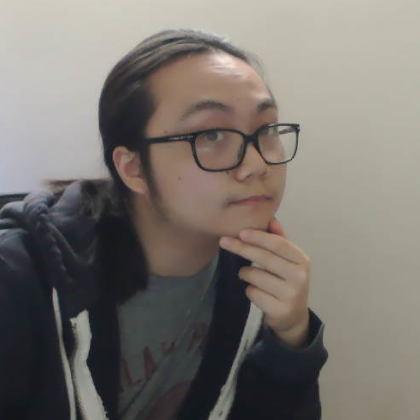

shu

I’m a computer vision/graphics researcher focused on bringing assistive AI tooling to artists, particularly creators working on anime-style content.

In 2024, I founded Dondon Technologies, a startup providing AI assistance to traditional 2D animators using the Japanese anime pipeline.

( ➤ publications page )

I did my PhD at the University of Maryland, College Park, with Prof. Matthias Zwicker; my thesis was on ML for animation, illustration, and 3D characters.

( ➤ experiences page )

I’ve also collaborated outside academia, with both the tech and anime industries (TikTok, Meta, OLM Digital, Arch Inc., etc.).

( ➤ anime page )

I just so happen to like anime; my favorite is Nichijou (2011). To trash my taste, please see my MAL (MyAnimeList) or my top anime page.

( ➤ artwork page )

I can draw a bit (not very well). Despite being a researcher in AI+art, I don’t use ML models for my own artwork; they still don’t do what I want.

research areas

AI-assisted animation. By studying animation industry practices, scaling data pipelines, bridging domain gaps, leveraging 3D priors, etc., I hope to uncover what AI can do for animation.

3D character modeling. My work tries to democratize 3D character creation, bringing customizable experiences to the next generation of social interaction.

Deep rendering. I firmly believe graphics is the future of computer vision. As such, I’m also interested in new 3D representations and rendering techniques.