shuhongchen

shuhong (shu) chen

陈舒鸿

info

email: shu[a]dondontech.com

web: shuhongchen.github.io

github: ShuhongChen

myanimelist: shuchen

linkedin: link

cv: latest

academic

google scholar: TcGJKGwAAAAJ

orcid: 0000-0001-7317-0068

erdős number: ≤4


AI in my art

➤ tl;dr just show me the pictures

2024-04

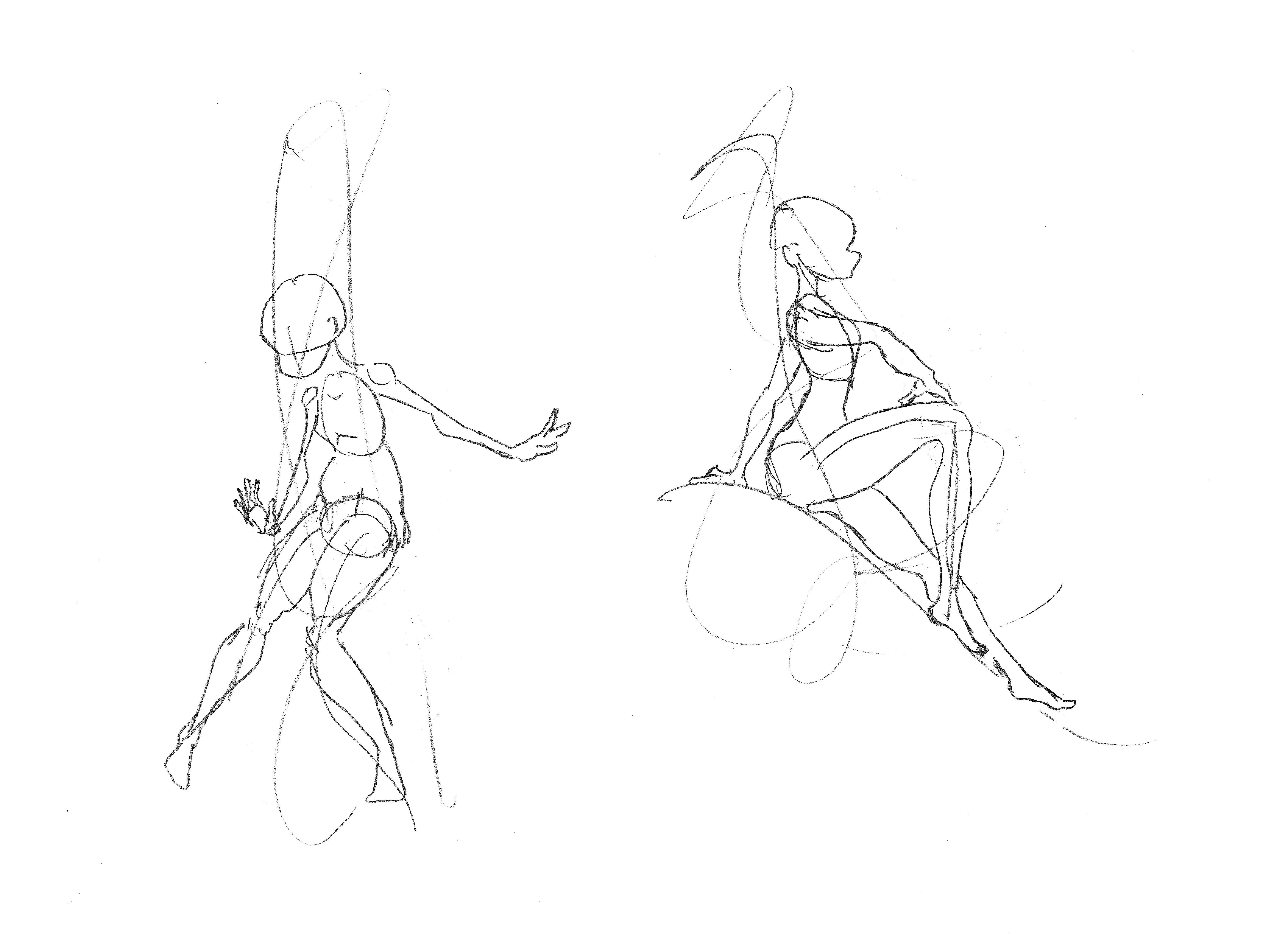

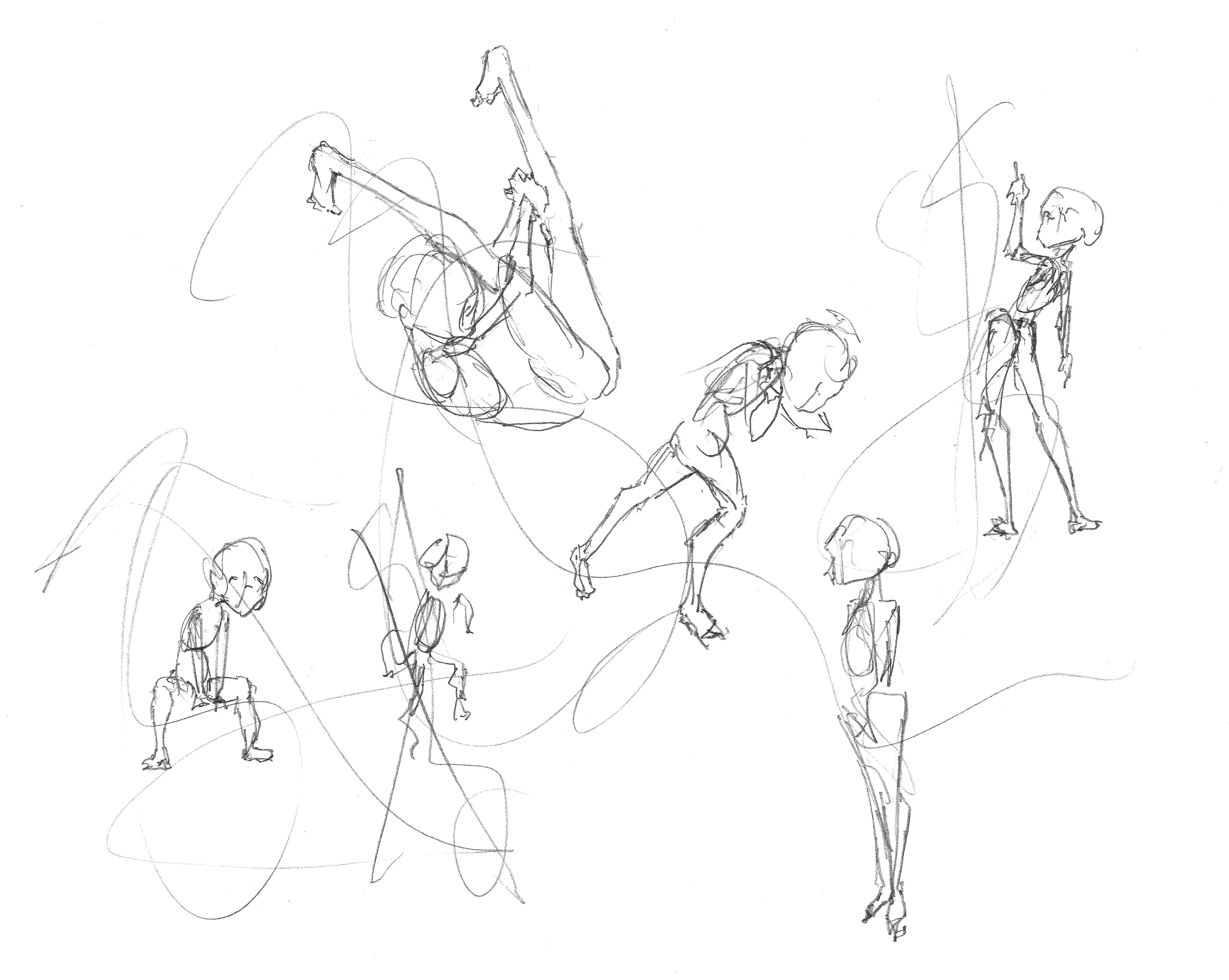

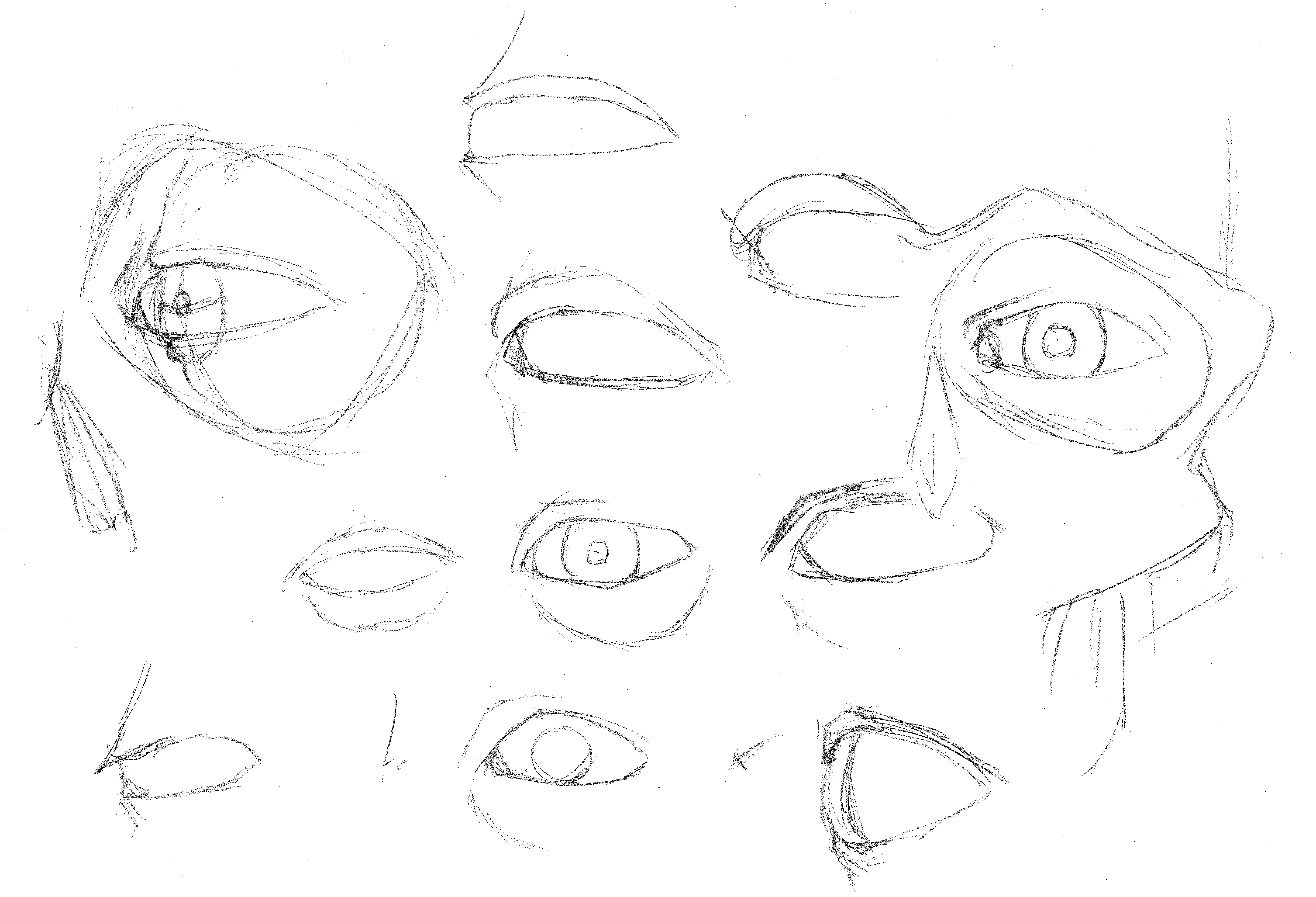

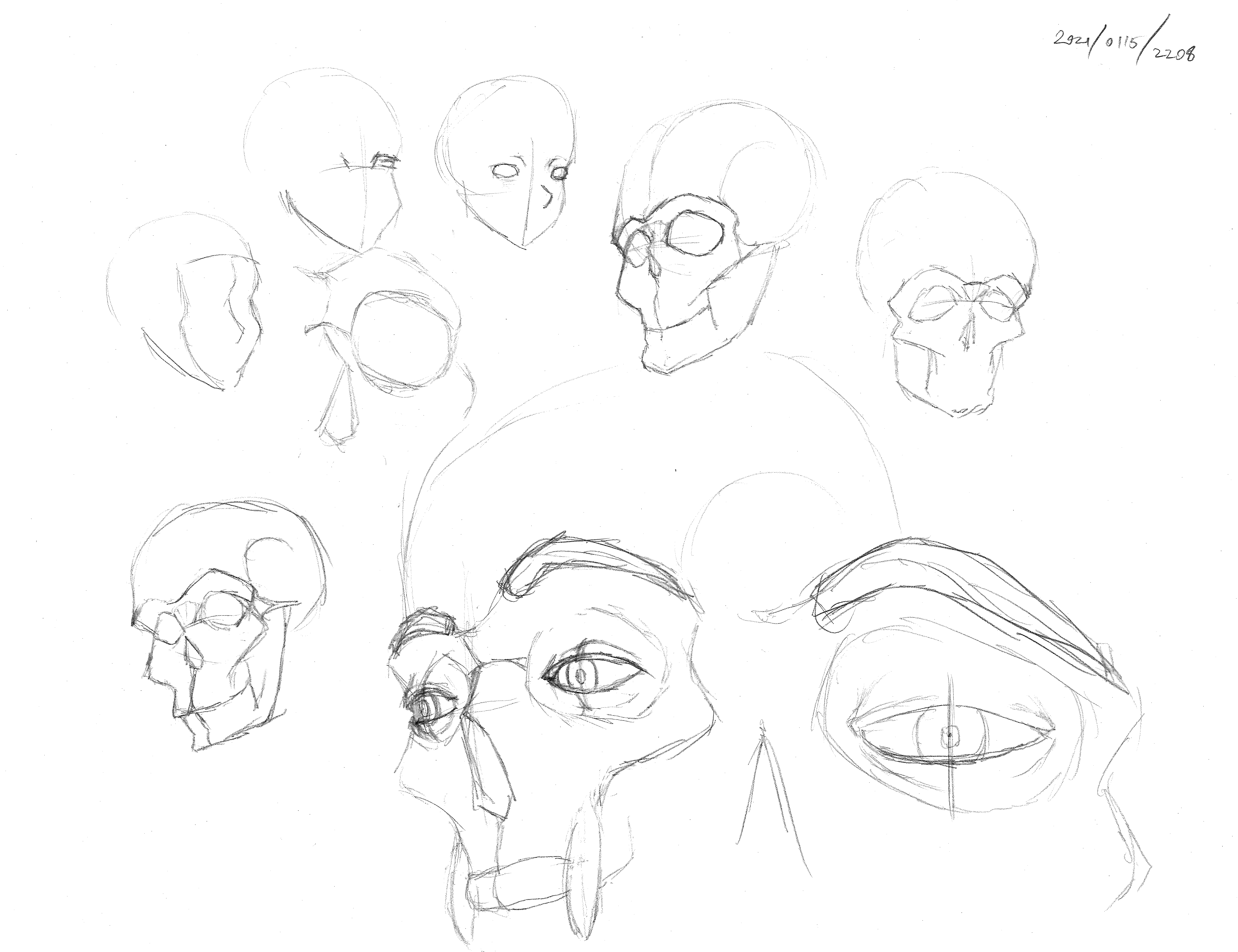

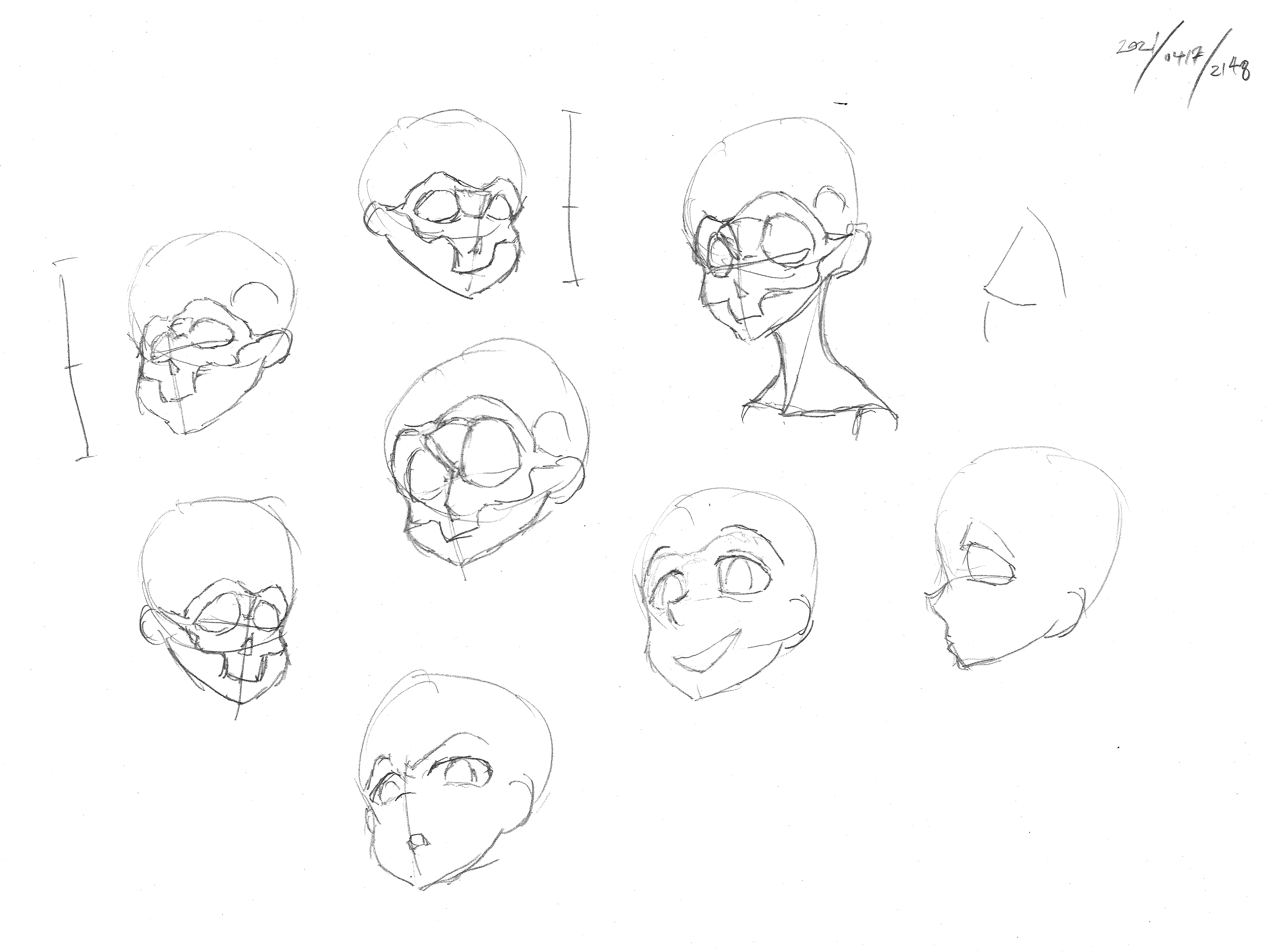

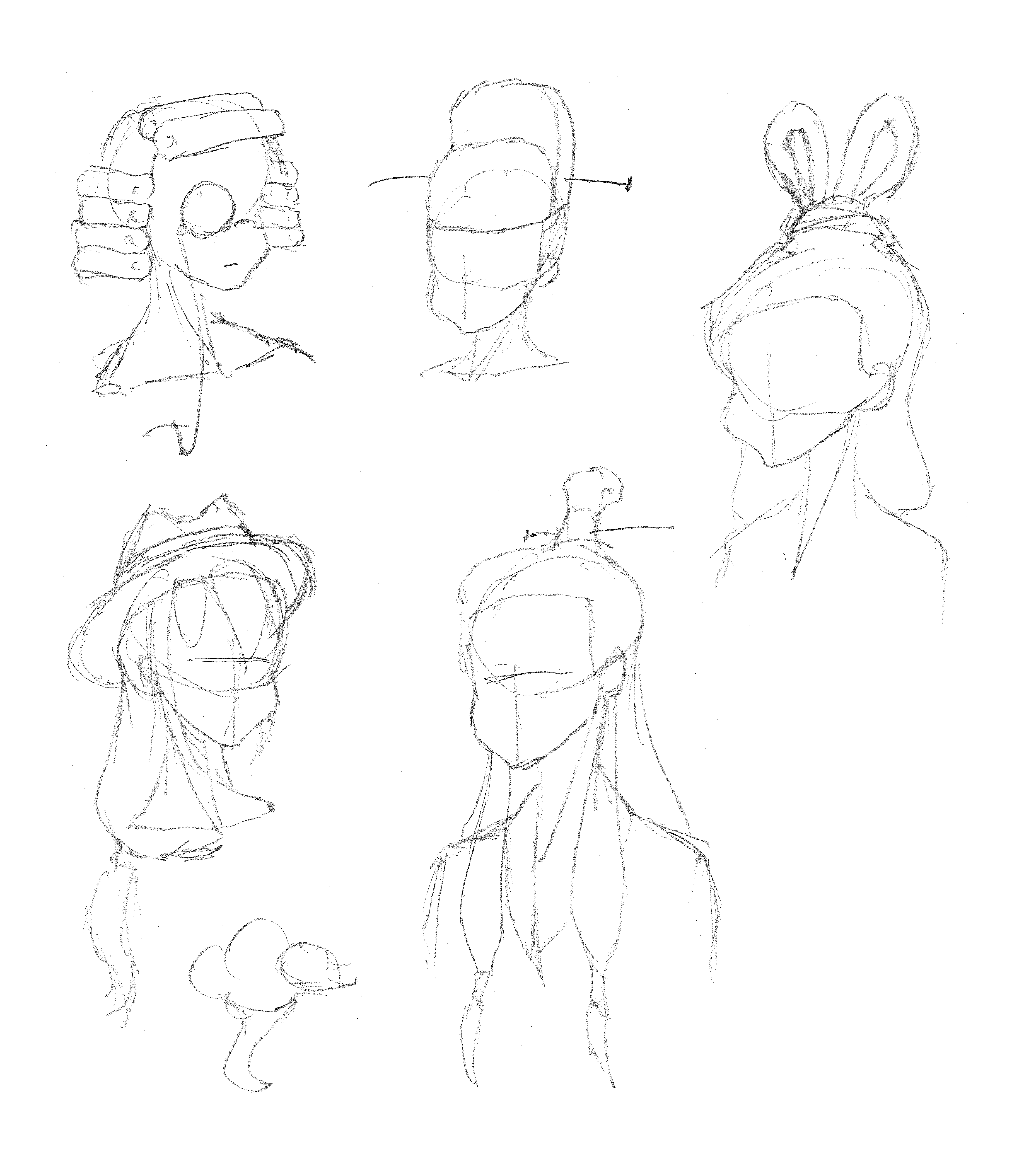

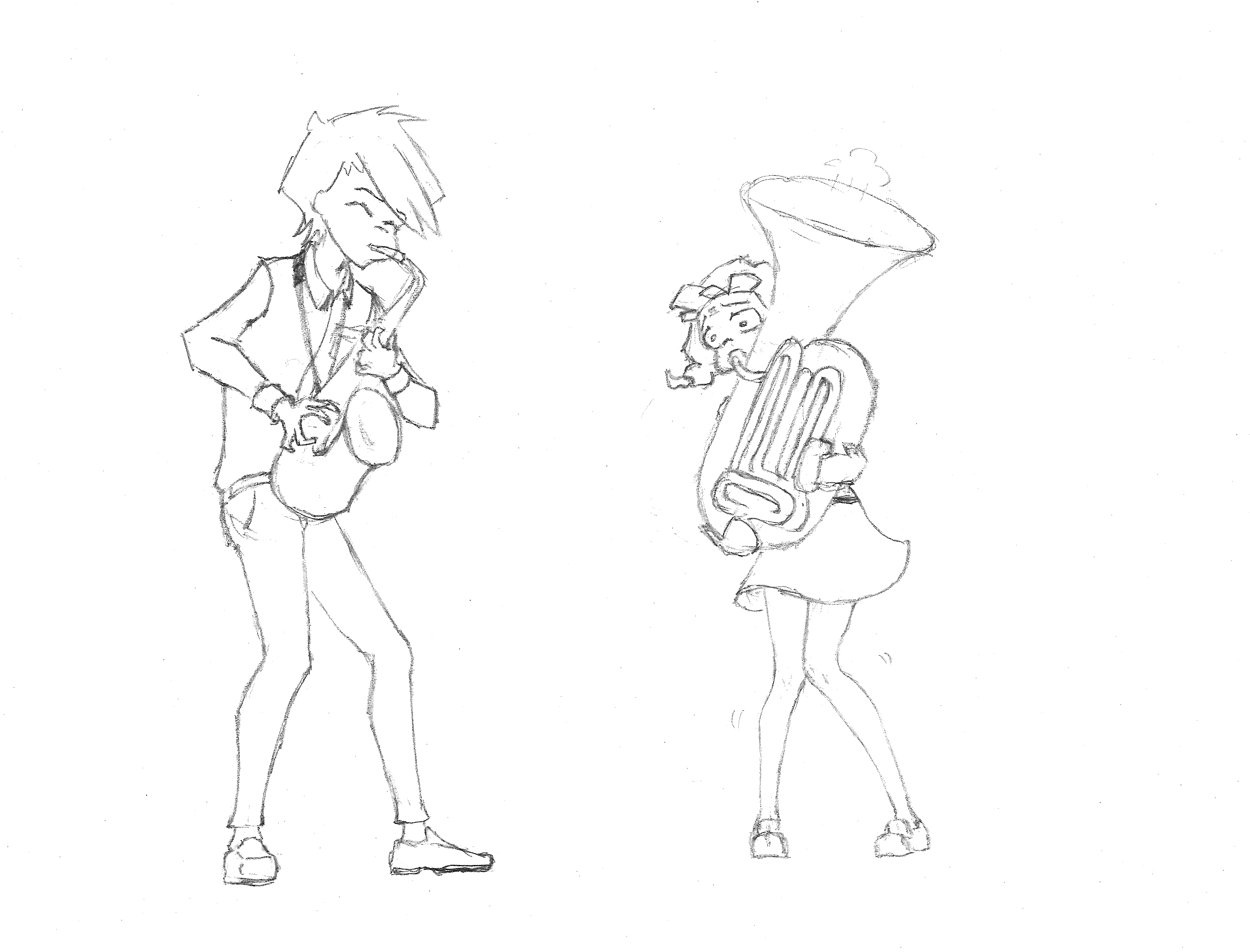

I only really draw for fun, either just to render an interesting idea I have, or to figure out which parts of a character design make it attractive. It usually takes only a couple of strokes into the first sketch for me to remember why I do computer science instead.

And this is just for black-and-white character sketches. I’ve done some cleaning and coloring, but the final versions tend to make my lack of fundamentals even more apparent. Animation is in a completely different league of hurt.

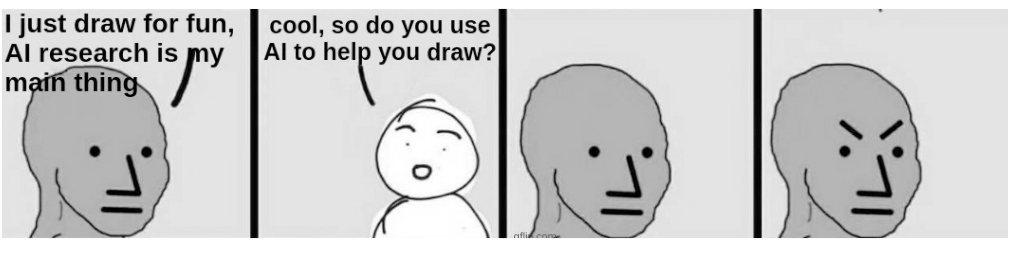

I’ve tried using AI for some of my work, but never found it useful for what I want to do (usually character art). Text models never get the character concept in my mind, and can’t carry over the parts they got right to the next iteration. Sketch cleanup methods only understand the “roughness” they were trained for, not the specific way my sketches need cleaning. Colorizers conflate coloring with effects, ignoring everything I want in order to invent things nobody asked for. I’ll put effort into running hacky research code, with barely functional (if existent) GUIs, only to find they solve problems different from mine.

Figuring out AI tooling just feels like a big distraction from the drawing I wanted to make. As a casual, I’m not under any pressure to accelerate my workflow; as an amateur, my process isn’t worth optimizing anyway. I’d much rather spend my leisure time actually drawing the things I like.

I still can’t draw digitally for the same reason; I’m here to figure out the visual mechanism by which a character affects our hearts, not the hotkeys… As of this writing, all my artwork is still on paper.

However, this is not to say that AI will never be useful to my art, nor to discredit the huge recent advancements in image generation. Most of the amazing new methods are good for folks who don’t know what they want, not for people who know what they need. Systems built for the latter group simply haven’t caught up yet.

Speaking more broadly about AI art, creating such systems for users needing high specificity is hard. Sure, there are technical challenges, but I personally think the bigger barrier is communication between artists and researchers.

We researchers would need to *gasp* touch grass and interact with actual artists, instead of squatting in an ivory vacuum claiming to solve invented art challenges. I encourage artists to set aside their prejudices and objectively understand the ways AI could help their own workflows, rather than giving in to general fearmongering.

Progress on these tools will only advance with open-mindedness and cooperation from both sides. I’m incredibly grateful to be working with artists who very patiently help me understand their work, and actively collaborate to improve the creative process.

As for my personal use cases, I’ve decided for now to put aside developing AI for my own art, in favor of first supporting the people who actually know what they’re doing and are producing content in the medium that I love.

In the future I might find enough commitment to draw my characters and stories into a manga or something. Maybe then, out of necessity, I’ll finally figure out how to use hotkeys and AI.

Enough text, let’s see some pictures

artwork

fanart

shiki spoiler

original

exercises